Building Resilient Open Source Infrastructure: A Deep Dive into Gentoo Mirroring and DevOps

Introduction: The Imperative of Infrastructure Independence

In the rapidly evolving landscape of open source software, the stability of public infrastructure is often taken for granted. Recent discussions within the community have highlighted the fragility of “public goods”: the free hosting, bandwidth, and build farms provided by universities and non-profits that sustain major distributions. While Linux news coverage often focuses on the latest kernel release or desktop environment polish, distributions like Gentoo, Debian, and Arch Linux rely heavily on a decentralized network of mirrors. When key infrastructure providers face funding challenges or operational shifts, the ripple effects are felt across the entire ecosystem, from Ubuntu to niche projects like Void Linux.

For users of source-based distributions, this reality is particularly acute. Unlike binary-centric distributions such as Fedora or Red Hat, Gentoo requires downloading source code “distfiles” and frequently synchronizing the Portage tree. If the primary mirrors become unavailable or throttled, the user experience degrades significantly. This article explores why open source infrastructure deserves to be taken seriously. We will build resilient local mirrors, optimize package management through automation, and apply modern DevOps practices, all of which are relevant whether you manage servers, desktop workstations, or complex Kubernetes clusters.

By removing reliance on a single point of failure, administrators can ensure continuity not just for Gentoo but for any Linux deployment, be it Rocky Linux, AlmaLinux, or Alpine Linux. We will look at practical implementations using Python and Bash, and discuss how tools like Docker and Ansible fit into modern repository management.

Section 1: The Architecture of Source-Based Distribution Mirroring

Understanding the Portage Workflow

To appreciate the need for resilient infrastructure, one must understand how Gentoo’s package manager, Portage, operates compared to apt or dnf. The administrator first synchronizes the ebuild repository (typically via rsync or git, with `emerge --sync`); when `emerge` then installs a package, it downloads the actual source code tarballs. This places a unique load on mirrors. While Linux Mint or Pop!_OS mirrors serve prebuilt binary packages, source mirrors must retain vast archives of historical source code versions.
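To make the download step concrete, here is a minimal sketch of how a distfile URL can be derived from a mirror. It assumes the mirror's `distfiles/layout.conf` declares `filename-hash BLAKE2B 8`, the hashed layout described in GLEP 75, where each file sits in a subdirectory named after the first two hex digits of the BLAKE2B hash of its file name. Portage reads each mirror's `layout.conf` rather than assuming this, and the helper name `distfile_url` and the tarball name are hypothetical.

```python
import hashlib


def distfile_url(mirror: str, filename: str, cutoff_bits: int = 8) -> str:
    """Build a distfile URL for a mirror using a filename-hash layout.

    Hypothetical helper: assumes the mirror's layout.conf declares
    'filename-hash BLAKE2B 8' (GLEP 75) instead of reading it.
    """
    if cutoff_bits % 4 != 0:
        raise ValueError("this sketch only handles cutoffs of whole hex digits")
    # BLAKE2B over the UTF-8 file name; keep the leading cutoff_bits as hex
    digest = hashlib.blake2b(filename.encode("utf-8")).hexdigest()
    subdir = digest[: cutoff_bits // 4]  # 8 bits -> 2 hex digits
    return f"{mirror.rstrip('/')}/distfiles/{subdir}/{filename}"


if __name__ == "__main__":
    # Hypothetical tarball name, used only for illustration
    print(distfile_url("https://distfiles.gentoo.org", "example-1.0.tar.gz"))
```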

In a robust enterprise or enthusiast setup, relying solely on the default `rsync.gentoo.org` rotation is insufficient. Network latency, upstream outages, or the aforementioned reduction in public hosting capacity can halt CI/CD pipelines. Mitigation starts with a tiered mirroring strategy in two places: a prioritized list of `GENTOO_MIRRORS` in `make.conf` for source files, and a `sync-uri` in `/etc/portage/repos.conf` that points the ebuild repository at a local rsync mirror (the old `SYNC` variable in `make.conf` is deprecated).
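The rsync half of the tier lives in `repos.conf`. As a hedged sketch, the helper below (the name `render_repos_conf` is hypothetical) uses Python's `configparser` to render an entry for the main repository. The LAN address `rsync://192.168.1.50/gentoo-portage` is an assumed local mirror exposing the usual `gentoo-portage` module name, and `/var/db/repos/gentoo` is the current default repository location.

```python
import configparser
import io


def render_repos_conf(sync_uri: str, location: str = "/var/db/repos/gentoo") -> str:
    """Render a repos.conf snippet for the main Gentoo repository.

    Hypothetical helper; sync_uri is expected to point at a local rsync mirror.
    """
    config = configparser.ConfigParser()
    config.optionxform = str  # keep key spelling exactly as written
    config["DEFAULT"] = {"main-repo": "gentoo"}
    config["gentoo"] = {
        "location": location,
        "sync-type": "rsync",
        "sync-uri": sync_uri,
        "auto-sync": "yes",
    }
    buffer = io.StringIO()
    config.write(buffer)  # writes "key = value" lines per section
    return buffer.getvalue()


if __name__ == "__main__":
    # Assumed LAN rsync mirror, for illustration only
    print(render_repos_conf("rsync://192.168.1.50/gentoo-portage"))
```

The output can be saved as `/etc/portage/repos.conf/gentoo.conf`; generating it from code mainly pays off when many machines must point at the same mirror.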

Configuring Resilient Mirror Priorities

The following example configures Portage to prioritize a local mirror (for speed and reliability) while failing over to a short list of public mirrors. It is a sensible baseline for any Gentoo administrator.

# /etc/portage/make.conf

# Distfile mirrors are tried in the order listed:
# 1. Primary: Local Mirror (LAN or private cloud)
# 2. Secondary: Reliable Tier-1 mirrors (e.g., Kernel.org)
# 3. Tertiary: The official distfiles host as a fallback
GENTOO_MIRRORS="
  http://192.168.1.50/gentoo/
  https://mirrors.kernel.org/gentoo/
  https://distfiles.gentoo.org/
"

# Advanced Rsync Configuration
# Extra options for rsync syncs: abort a transfer that stalls for 60 seconds,
# and skip the paths listed in /etc/portage/rsync_excludes to save bandwidth.
PORTAGE_RSYNC_EXTRA_OPTS="--timeout=60 --exclude-from=/etc/portage/rsync_excludes"

# Fetch distfiles with curl, retrying transient failures and timing out slow connects.
# (Fetching in the background while compiling is a separate switch: FEATURES="parallel-fetch".)
FETCHCOMMAND="curl --retry 3 --connect-timeout 60 -f -L -o \"\${DISTDIR}/\${FILE}\" \"\${URI}\""
RESUMECOMMAND="curl -C - --retry 3 --connect-timeout 60 -f -L -o \"\${DISTDIR}/\${FILE}\" \"\${URI}\""

This setup ensures that if a specific university mirror goes offline, your system automatically tries the next source. The same idea applies to Manjaro or openSUSE users tuning their own mirror lists. Using `curl` with retry logic also helps ride out transient network issues.
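Portage already performs this failover internally. To reuse the same pattern in your own tooling, a minimal sketch might look like the following: mirrors are tried in order, each gets a few attempts with exponential backoff, and the function gives up only when every mirror has failed. The name `fetch_with_failover` and the injected `fetch` callable are hypothetical; injecting `fetch` keeps the logic testable without a network.

```python
import time
from typing import Callable, Iterable, Optional


def fetch_with_failover(
    relative_path: str,
    mirrors: Iterable[str],
    fetch: Callable[[str], bytes],
    retries: int = 3,
    backoff_seconds: float = 1.0,
) -> Optional[bytes]:
    """Try each mirror in order, retrying transient failures before moving on.

    `fetch` (hypothetical) downloads one URL and raises OSError on failure;
    a thin urllib wrapper would do in practice. Returns None if all mirrors fail.
    """
    for mirror in mirrors:
        url = f"{mirror.rstrip('/')}/{relative_path.lstrip('/')}"
        for attempt in range(retries):
            try:
                return fetch(url)
            except OSError:
                # Exponential backoff on the same mirror: 1s, 2s, 4s, ...
                if attempt < retries - 1:
                    time.sleep(backoff_seconds * 2 ** attempt)
    return None  # every mirror failed
```

With the defaults, a dead mirror costs 3 seconds of backoff (1 s + 2 s) plus its own connection timeouts before the next mirror is tried.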

Section 2: Implementing a Private Mirror with Docker and Nginx

Containerizing Infrastructure

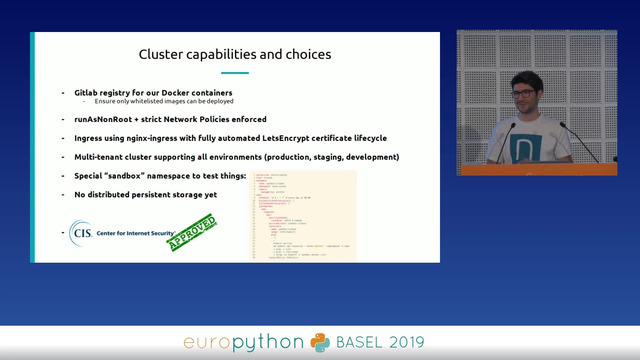

With the rise of Linux containers, hosting a local mirror has never been easier. Instead of dedicating a bare-metal server, we can deploy a lightweight mirror with Docker. This is particularly useful for teams with DevOps workflows where reproducible environments are key. A private mirror not only insulates you from public outages but also significantly reduces bandwidth usage if you have multiple Gentoo machines (or LXC containers) on the same network.

We will use Nginx to serve the files over HTTP, and a second container that runs rsync in a timed loop to keep them in sync. This setup can be adapted for CentOS or Ubuntu repositories as well.
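Nginx is the right tool for production, but a mirror is ultimately just static files over HTTP. For testing client configuration before the containers are up, the standard library can serve a mirror directory. The sketch below is a stand-in under that assumption, not a production server; the helper name `serve_mirror` is hypothetical, and it binds to localhost only.

```python
import functools
import http.server
import os
import tempfile
import threading
import urllib.request


def serve_mirror(directory: str, port: int = 0) -> http.server.ThreadingHTTPServer:
    """Serve `directory` over HTTP in a background thread (test stand-in for Nginx).

    Port 0 asks the OS for a free port; read it back from server.server_address.
    """
    handler = functools.partial(http.server.SimpleHTTPRequestHandler, directory=directory)
    server = http.server.ThreadingHTTPServer(("127.0.0.1", port), handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


if __name__ == "__main__":
    # Demo: publish a throwaway file and fetch it back the way a client would
    with tempfile.TemporaryDirectory() as root:
        os.makedirs(os.path.join(root, "distfiles"))
        with open(os.path.join(root, "distfiles", "hello.txt"), "wb") as fh:
            fh.write(b"hello from the mirror")
        server = serve_mirror(root)
        port = server.server_address[1]
        with urllib.request.urlopen(f"http://127.0.0.1:{port}/distfiles/hello.txt") as resp:
            print(resp.read().decode())
        server.shutdown()
        server.server_close()
```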

The Docker Compose Implementation

Below is a `docker-compose.yml` setup that creates a persistent volume for distfiles and serves them. It includes a health check so Docker flags the web server as unhealthy when it stops responding, a standard monitoring practice.

version: '3.8'

services:
  gentoo-mirror:
    image: nginx:alpine
    container_name: local_gentoo_mirror
    restart: always
    ports:
      - "8080:80"
    volumes:
      - gentoo_data:/usr/share/nginx/html/gentoo
      - ./nginx.conf:/etc/nginx/conf.d/default.conf:ro
    # Mark the container unhealthy if nginx stops answering HTTP requests.
    # Assumes ./nginx.conf returns a 2xx for "/" (e.g. via autoindex or an index page).
    healthcheck:
      test: ["CMD-SHELL", "wget -q -O /dev/null http://127.0.0.1/ || exit 1"]
      interval: 30s
      timeout: 5s
      retries: 3
    networks:
      - mirror_net

  mirror-syncer:
    image: alpine:latest
    container_name: mirror_syncer
    depends_on:
      - gentoo-mirror
    volumes:
      - gentoo_data:/data/gentoo
    # Sync every 4 hours (14400 seconds) using rsync
    command: >
      sh -c "apk add --no-cache rsync &&
      while true; do
      echo 'Starting sync...';
      rsync -avz --delete --bwlimit=5000 rsync://rsync.us.gentoo.org/gentoo-distfiles/ /data/gentoo/;
      echo 'Sync complete. Sleeping for 4 hours...';
      sleep 14400;
      done"
    networks:
      - mirror_net

volumes:
  gentoo_data:

networks:
  mirror_net:

This configuration shows where containerization and package management meet. By using a separate syncer container, we decouple the fetching logic from the serving logic. The `--bwlimit` flag is crucial: rsync reads the unsuffixed value 5000 as 5000 KiB/s (roughly 41 Mbit/s), so mirroring does not saturate your internet connection, a polite practice when consuming public goods. The approach also scales: you could add Prometheus exporters and Grafana dashboards to monitor disk usage and sync status, bringing observability into your home lab or enterprise environment.
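The `--bwlimit` arithmetic is easy to get wrong, so a small helper can turn the cap into time and space estimates before you commit to a sync schedule. This sketch assumes rsync's documented behavior of reading an unsuffixed value as units of 1024 bytes per second; the helper names are hypothetical, and the data sizes you feed in must come from your own mirror, since none are assumed here.

```python
import shutil


def bwlimit_to_bytes_per_second(bwlimit: int) -> int:
    """rsync reads an unsuffixed --bwlimit value in units of 1024 bytes per second."""
    return bwlimit * 1024


def estimated_sync_hours(total_bytes: int, bwlimit: int) -> float:
    """Lower bound on transfer time at the rate cap (ignores latency and rsync overhead)."""
    return total_bytes / bwlimit_to_bytes_per_second(bwlimit) / 3600


def has_headroom(path: str, needed_bytes: int, reserve_fraction: float = 0.1) -> bool:
    """True if the filesystem holding `path` fits `needed_bytes` and still keeps a reserve free."""
    usage = shutil.disk_usage(path)
    return usage.free - needed_bytes >= usage.total * reserve_fraction
```

At `--bwlimit=5000` the cap is 5,120,000 bytes per second, so each 100 GiB takes at least about 5.8 hours; a check like `has_headroom` could feed a simple disk-space alert.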

Section 3: Advanced Automation and Health Checking

Python for Mirror Latency Analysis

Simply having a list of mirrors isn’t enough; you need to know which ones are performing well. In system administration, Python is often the glue that holds infrastructure together. Whether you run cloud instances on AWS or bare metal, automating the selection of the fastest mirror can noticeably shorten downloads.

The following Python script analyzes a list of mirrors, measures their TCP connect latency, and checks that a specific file exists (a basic sign that the mirror is populated, though not proof that it is current). This is similar to the logic in Arch Linux tools like `reflector`, but tailored for custom deployments. It uses only the standard library, so it runs anywhere Python 3 is installed.

import socket
import time
import urllib.request
from urllib.parse import urlparse

MIRRORS = [
    "http://distfiles.gentoo.org",
    "http://mirrors.kernel.org/gentoo",
    "http://ftp.osuosl.org/pub/gentoo",
    "http://www.gtlib.gatech.edu/pub/gentoo",
]


def check_mirror_latency(url):
    """
    Measures the TCP connect time to the mirror's host, in milliseconds.
    Returns float('inf') if the host cannot be reached within 2 seconds.
    """
    parsed = urlparse(url)
    # Honor an explicit port; otherwise use the scheme's default
    port = parsed.port or (443 if parsed.scheme == "https" else 80)
    start_time = time.perf_counter()
    try:
        with socket.create_connection((parsed.hostname, port), timeout=2):
            pass
    except OSError:  # DNS failures, refused connections and timeouts
        return float("inf")
    return (time.perf_counter() - start_time) * 1000  # Convert to ms


def verify_mirror_content(url):
    """
    Checks that the mirror serves its timestamp file (HTTP 200).
    This shows the mirror is populated; it does not prove the file is recent.
    """
    target_file = f"{url}/releases/timestamp.x"
    try:
        with urllib.request.urlopen(target_file, timeout=3) as response:
            return response.status == 200
    except OSError:  # URLError, HTTPError and socket timeouts all derive from OSError
        return False


def find_best_mirror(mirror_list):
    print(f"Analyzing {len(mirror_list)} mirrors...")
    results = []
    for mirror in mirror_list:
        latency = check_mirror_latency(mirror)
        if latency < 1000:  # Only check content if latency is reasonable
            if verify_mirror_content(mirror):
                results.append((mirror, latency))
                print(f"[OK] {mirror}: {latency:.2f}ms")
            else:
                print(f"[FAIL] {mirror}: Content verification failed")
        else:
            print(f"[SLOW/DOWN] {mirror}")
    # Sort by latency, lowest first
    results.sort(key=lambda x: x[1])
    if results:
        return results[0][0]
    return None


if __name__ == "__main__":
    best = find_best_mirror(MIRRORS)
    if best:
        print(f"\nRecommended Mirror: {best}")
        # In a real scenario, you might write this to make.conf automatically
    else:
        print("\nNo valid mirrors found.")

This script shows how far a few lines of standard-library Python go in system administration. By integrating such scripts into Ansible playbooks or SaltStack states, you can update your configuration based on current network conditions. This matters most in edge computing deployments, where network topology may change frequently.
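Following up on the script's closing comment, here is a hedged sketch of writing the result back. It operates on the text of `make.conf` (reading, backing up, and writing the file is left to the caller), replaces the first uncommented `GENTOO_MIRRORS="..."` assignment even when it spans several lines, and appends one if none exists. The helper name `set_gentoo_mirrors` is hypothetical, and the sketch does not handle values containing escaped quotes.

```python
import re
from typing import Sequence

# Matches an uncommented GENTOO_MIRRORS="..." at line start, including multi-line values
_MIRRORS_RE = re.compile(r'^GENTOO_MIRRORS="[^"]*"', re.MULTILINE)


def set_gentoo_mirrors(make_conf: str, mirrors: Sequence[str]) -> str:
    """Return make.conf text with GENTOO_MIRRORS set to `mirrors` (best first)."""
    line = 'GENTOO_MIRRORS="' + " ".join(mirrors) + '"'
    if _MIRRORS_RE.search(make_conf):
        # A function replacement avoids backslash escapes being interpreted
        return _MIRRORS_RE.sub(lambda _match: line, make_conf, count=1)
    # Variable not present yet: append it
    return make_conf.rstrip("\n") + "\n" + line + "\n"
```

A typical call passes the recommended mirror first and keeps a fallback after it, for example `set_gentoo_mirrors(text, [best, "https://distfiles.gentoo.org/"])`.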

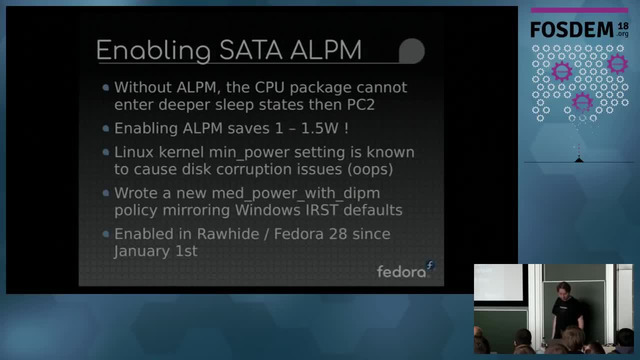

Filesystem Considerations: ZFS and Btrfs

When hosting a mirror or a large compilation server, the underlying filesystem matters. Administrators often weigh ext4 against modern Copy-on-Write (CoW) filesystems. ZFS and Btrfs offer transparent compression, which can substantially shrink the ebuild repository (many small text files) and build logs; distfiles themselves are mostly already-compressed tarballs and gain little. Snapshots also provide an instant recovery point if a sync goes wrong, which makes them invaluable for backup strategies.
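The difference between text and already-compressed data is easy to demonstrate. This sketch uses Python's `zlib` (deflate, the algorithm behind Btrfs's zlib option and ZFS's gzip levels) as a stand-in for the filesystem codec, and random bytes as a proxy for a compressed tarball. With lz4 or zstd the exact ratios differ, but the contrast should hold; the helper name is hypothetical.

```python
import os
import zlib


def compression_ratio(data: bytes, level: int = 6) -> float:
    """Original size divided by zlib-compressed size (higher = more compressible)."""
    return len(data) / len(zlib.compress(data, level))


if __name__ == "__main__":
    # Text resembling ebuild content compresses well...
    text = b"".join(
        f'EAPI=8\nDESCRIPTION="example package {i}"\nSLOT="0"\nKEYWORDS="~amd64"\n'.encode()
        for i in range(500)
    )
    # ...while random bytes, a proxy for an already-compressed tarball, do not.
    tarball_like = os.urandom(len(text))
    print(f"text-like:    {compression_ratio(text):.1f}x")
    print(f"tarball-like: {compression_ratio(tarball_like):.2f}x")
```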

Section 4: Best Practices and Security in a Decentralized Era

Verifying Integrity

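As we move away from centralized authorities due to potential infrastructure constraints, security verification becomes paramount, and supply chain attacks are a recurring concern. Gentoo signs its repository with OpenPGP keys, and Portage checks every distfile against the checksums in the signed Manifest files, so a misbehaving mirror cannot silently substitute a tarball. When setting up your own infrastructure, ensure that `gemato` (Gentoo's Manifest verification tool, which also checks the OpenPGP signatures) is correctly configured. Never disable signature verification (for rsync syncs, the `sync-rsync-verify-metamanifest` option in `repos.conf`) simply because a mirror is throwing errors; switch mirrors instead.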
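To illustrate what Manifest verification checks for a single distfile, here is a simplified sketch. It is not gemato and does not verify OpenPGP signatures; it only compares a file's size and BLAKE2B/SHA512 digests against one `DIST` line of the form `DIST <file> <size> <HASH> <hex> ...`. The function name `verify_dist_entry` is hypothetical.

```python
import hashlib
import os
import tempfile


def verify_dist_entry(manifest_line: str, path: str) -> bool:
    """Check a downloaded file against one Manifest 'DIST' line (simplified sketch).

    Only BLAKE2B and SHA512 are checked; other hash names are ignored.
    """
    fields = manifest_line.split()
    if len(fields) < 5 or fields[0] != "DIST":
        raise ValueError("not a DIST line")
    hashes = dict(zip(fields[3::2], fields[4::2]))  # {"BLAKE2B": hex, "SHA512": hex}
    if os.path.getsize(path) != int(fields[2]):
        return False
    checked = 0
    for name, constructor in (("BLAKE2B", hashlib.blake2b), ("SHA512", hashlib.sha512)):
        if name in hashes:
            digest = constructor()
            with open(path, "rb") as fh:
                for chunk in iter(lambda: fh.read(1 << 20), b""):  # 1 MiB chunks
                    digest.update(chunk)
            if digest.hexdigest() != hashes[name].lower():
                return False
            checked += 1
    return checked > 0  # refuse to "verify" when no supported hash is present


if __name__ == "__main__":
    # Demo with a throwaway file standing in for a distfile
    data = b"source code"
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(data)
    line = (f"DIST example-1.0.tar.gz {len(data)} "
            f"BLAKE2B {hashlib.blake2b(data).hexdigest()} SHA512 {hashlib.sha512(data).hexdigest()}")
    print(verify_dist_entry(line, tmp.name))  # True
    os.remove(tmp.name)
```

In practice, trust such a line only after the Manifest that contains it has passed signature verification.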

Distributed Compiling with Distcc

If public binary package hosts (binhosts) become unreliable, you will be compiling more locally. To offset the time cost, a common recommendation is `distcc`, which offloads compilation jobs to other machines on your network. A cluster of older machines running Lubuntu or Xubuntu can contribute CPU cycles to a main Gentoo workstation, as long as each helper runs a compatible compiler (ideally the same GCC version) for the same target architecture.
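Sizing a distcc setup comes down to a little arithmetic. The sketch below builds a host list in distcc's `host/limit` form (usable with `distcc-config --set-hosts`) and a `MAKEOPTS` value, assuming the commonly cited rule of thumb of twice the total (local plus remote) core count plus one jobs, with a load limit equal to the local core count. Hostnames and the helper name `distcc_settings` are hypothetical; treat the numbers as a starting point, and note that `FEATURES="distcc"` must also be enabled.

```python
from typing import Dict


def distcc_settings(local_cores: int, helpers: Dict[str, int]) -> Dict[str, str]:
    """Build a distcc host list and a MAKEOPTS value from core counts.

    helpers maps a (hypothetical) hostname to its core count.
    Rule of thumb: jobs = 2 * total cores + 1, load limit = local cores.
    """
    hosts = [f"localhost/{local_cores}"]
    hosts += [f"{host}/{cores}" for host, cores in helpers.items()]
    total = local_cores + sum(helpers.values())
    return {"hosts": " ".join(hosts), "MAKEOPTS": f"-j{2 * total + 1} -l{local_cores}"}


if __name__ == "__main__":
    print(distcc_settings(4, {"old-laptop": 2, "spare-desktop": 4}))
```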

Infrastructure as Code (IaC)

Treat your mirror configuration and client setups as code. Use Git to version-control your `/etc/portage` directory. Tools like Terraform can provision the cloud instances for your mirrors, while Puppet or Chef can enforce the configuration. If you ever need to migrate from AWS to DigitalOcean because of cost or policy changes, the transition is then far smoother.

Broader Linux Ecosystem Implications

The lessons learned from Gentoo's infrastructure challenges apply universally. Whether you run Kali Linux for security audits, Parrot OS for privacy, or Oracle Linux for enterprise databases, dependency on external repositories is a risk.

Users of NixOS and Guix are already familiar with the benefits of binary caches and reproducible builds. Similarly, Flatpak, Snap, and AppImage bundle dependencies to avoid "dependency hell," but they still largely rely on central delivery channels. A hybrid approach, using local caching proxies for apt (like `apt-cacher-ng`) or pacman, is a robust strategy for any Linux distribution.
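Tools like `apt-cacher-ng` are full HTTP proxies, but the core idea they share is a read-through cache: serve from local disk on a hit, fetch from upstream and store on a miss. The minimal sketch below assumes a hypothetical `upstream` callable and trusted relative paths, and omits expiry and concurrency handling; real proxies must also refresh metadata that changes over time, such as repository indexes.

```python
import os
import tempfile
from typing import Callable


def cached_fetch(cache_dir: str, relative_path: str, upstream: Callable[[str], bytes]) -> bytes:
    """Read-through cache: serve from disk on a hit, fetch and store on a miss.

    `upstream` is a hypothetical callable returning the bytes for relative_path
    (e.g. an HTTP GET against a public mirror). Paths are assumed trusted.
    """
    local_path = os.path.join(cache_dir, relative_path)
    if os.path.exists(local_path):
        with open(local_path, "rb") as fh:
            return fh.read()
    data = upstream(relative_path)
    os.makedirs(os.path.dirname(local_path), exist_ok=True)
    # Write under a temporary name first so a crash never leaves a truncated cache entry
    tmp_path = local_path + ".part"
    with open(tmp_path, "wb") as fh:
        fh.write(data)
    os.replace(tmp_path, local_path)
    return data


if __name__ == "__main__":
    requests_made = []

    def fake_upstream(path: str) -> bytes:
        requests_made.append(path)
        return b"package data"

    with tempfile.TemporaryDirectory() as cache:
        cached_fetch(cache, "pool/main/example.deb", fake_upstream)
        cached_fetch(cache, "pool/main/example.deb", fake_upstream)
        print(f"upstream requests: {len(requests_made)}")  # upstream requests: 1
```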

Conclusion

The stability of the open source ecosystem relies on a shared responsibility between public institutions and the community. While news regarding the challenges faced by long-standing hosting providers like OSUOSL is concerning, it serves as a critical wake-up call. We cannot treat open source infrastructure as an infinite, immutable resource. For Gentoo users, taking ownership of your infrastructure via local mirroring, intelligent failover scripts, and containerized services is not just a technical exercise; it is a survival strategy.

By implementing the code examples provided (robust `make.conf` failovers, Dockerized mirrors, and automated latency checks), you make your own systems more resilient and reduce the strain on public goods. Whether you are a casual Linux Mint user or a kernel hacker following every kernel release, the principles of redundancy and decentralization remain the bedrock of a healthy open source environment. As Linux expands further into cloud and IoT deployments, let us build systems that are designed to withstand the inevitable shifts in the digital landscape.