The Evolution of Container-Optimized Linux on DigitalOcean: A Deep Dive into Immutable Infrastructure

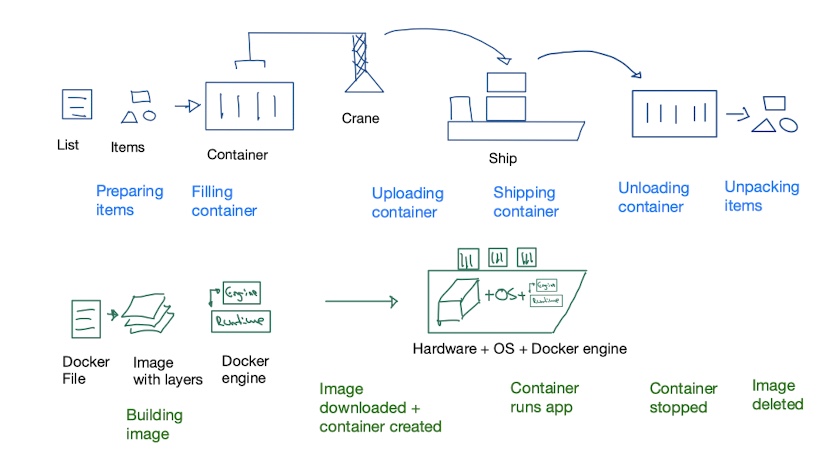

Server management on DigitalOcean is undergoing a fundamental transformation. For years, the standard deployment model involved spinning up a Virtual Private Server (VPS) running a general-purpose distribution, be it Debian for its stability, Ubuntu for its user-friendliness, or CentOS for its enterprise conservatism. The arrival of container-optimized operating systems (pioneered by CoreOS and now represented by successors such as Fedora CoreOS and Flatcar) on cloud platforms like DigitalOcean marks a significant step in the maturity of cloud-native computing.

This shift is not merely about a new flavor of Linux; it is a philosophical change in Linux administration. We are moving away from maintaining long-lived servers (pets) toward immutable infrastructure (cattle). This article explores the technical details of deploying container-centric Linux distributions on DigitalOcean, using Docker, systemd, and etcd to build resilient, scalable clusters. Whatever distribution you normally run, whether Red Hat, Arch Linux, or Alpine Linux, understanding the mechanics of a minimal, container-focused OS is now a core skill for DevOps engineers.

Section 1: The Architecture of Container-Optimized Linux

Unlike desktop-oriented distributions such as Linux Mint or Pop!_OS, which ship environments like GNOME or KDE Plasma, container-optimized Linux distributions are headless and minimal. The primary goal is to provide just enough operating system to run containers.

The Read-Only Filesystem and Atomic Updates

One of the defining features of this architecture is the read-only /usr partition. Core system binaries cannot be modified by running processes, which significantly reduces the attack surface. Updates are applied atomically: instead of running apt-get upgrade or dnf update, the system downloads a full image of the next OS version to a passive partition and reboots into it. Because the previous partition is left untouched, the system can fall back to it if the new version fails to boot.
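To make the active/passive update flow concrete, here is a toy Python model. It is a sketch for illustration only: the `Slot` and `Host` classes, the health flag, and the slot names `USR-A` and `USR-B` are assumptions of this example, not an interface exposed by any distribution.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Slot:
    """One of the two OS partitions (hypothetical model, not a real API)."""
    name: str
    version: str
    healthy: bool = True


@dataclass
class Host:
    """Toy host with one active and one passive OS slot."""
    active: Slot
    passive: Slot
    history: List[str] = field(default_factory=list)

    def stage_update(self, version: str, healthy: bool = True) -> None:
        # The new image is written to the passive slot only; the running
        # system in the active slot is never modified in place.
        self.passive.version = version
        self.passive.healthy = healthy
        self.history.append(f"staged {version} on {self.passive.name}")

    def reboot(self) -> str:
        # Boot into the other slot. If it fails its health check, swap
        # back so the previously running version stays in service.
        self.active, self.passive = self.passive, self.active
        if not self.active.healthy:
            self.history.append(f"rollback from {self.active.version}")
            self.active, self.passive = self.passive, self.active
        self.history.append(f"booted {self.active.version}")
        return self.active.version


if __name__ == "__main__":
    host = Host(active=Slot("USR-A", "1.0"), passive=Slot("USR-B", "0.9"))
    host.stage_update("1.1")
    print(host.reboot())
    print(host.history)
```

The point of the model is that an update never touches the running slot, so a bad image costs one extra reboot rather than a broken host.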

This approach sidesteps the “dependency hell” familiar to users of distributions such as Gentoo or Slackware, where library conflicts can break applications. In a container-optimized environment, applications are decoupled from the host OS libraries and run entirely inside Docker or Podman containers.

Cloud-Config and Ignition: Bootstrapping Identity

When deploying on DigitalOcean, the “User Data” field becomes the primary interface for configuration. Unlike Ansible or Chef, which typically run after the machine is reachable over SSH, tools like cloud-init or Ignition configure the machine during the very first boot. This makes them essential for automated provisioning.

Below is a practical example of a Cloud-Config YAML file. It creates a user, injects an SSH key, writes a sysctl drop-in file, and configures a systemd unit that starts a simple web service, all without manual terminal interaction. Note that the coreos: section is specific to the legacy CoreOS cloud-config format; Fedora CoreOS is configured with Ignition instead.

```yaml
#cloud-config

users:
  - name: devops_user
    groups:
      - sudo
      - docker
    shell: /bin/bash
    ssh-authorized-keys:
      - ssh-rsa AAAAB3NzaC1yc2E... your-public-key ...

write_files:
  - path: /etc/sysctl.d/99-custom-networking.conf
    permissions: '0644'
    content: |
      net.ipv4.ip_forward = 1
      net.ipv4.conf.all.accept_redirects = 0

coreos:
  units:
    - name: "docker-nginx.service"
      command: "start"
      content: |
        [Unit]
        Description=Nginx Container
        After=docker.service
        Requires=docker.service

        [Service]
        TimeoutStartSec=0
        # Restart the container whenever it exits
        Restart=always
        # A leading "-" makes systemd ignore a failure of that command
        ExecStartPre=-/usr/bin/docker kill nginx-web
        ExecStartPre=-/usr/bin/docker rm nginx-web
        ExecStartPre=/usr/bin/docker pull nginx:alpine
        ExecStart=/usr/bin/docker run --name nginx-web -p 80:80 nginx:alpine
        ExecStop=/usr/bin/docker stop nginx-web

        [Install]
        WantedBy=multi-user.target
```

In this example, we see the convergence of systemd and container management. The service definition makes the Nginx container a first-class citizen of the OS, and the Restart=always directive tells systemd to restart it automatically if it fails, a concept crucial for high availability.
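When several containers need the same treatment, the unit text can be generated rather than copied by hand. The following Python sketch mirrors the Nginx unit above; the function name `render_container_unit` and its parameters are hypothetical helpers invented for this example. It emits a `Restart=always` line, which is the directive that makes systemd restart the container after a failure.

```python
def render_container_unit(name: str, image: str,
                          host_port: int, container_port: int) -> str:
    """Return systemd unit text that runs a single Docker container.

    Hypothetical helper for illustration; it is not part of cloud-config
    or of any Docker tooling.
    """
    docker = "/usr/bin/docker"
    lines = [
        "[Unit]",
        f"Description={name} container",
        "After=docker.service",
        "Requires=docker.service",
        "",
        "[Service]",
        "TimeoutStartSec=0",
        "Restart=always",
        # The leading "-" tells systemd to ignore a non-zero exit status,
        # so a missing old container does not block startup.
        f"ExecStartPre=-{docker} kill {name}",
        f"ExecStartPre=-{docker} rm {name}",
        f"ExecStartPre={docker} pull {image}",
        f"ExecStart={docker} run --name {name} "
        f"-p {host_port}:{container_port} {image}",
        f"ExecStop={docker} stop {name}",
        "",
        "[Install]",
        "WantedBy=multi-user.target",
    ]
    return "\n".join(lines) + "\n"
```

Calling `render_container_unit("nginx-web", "nginx:alpine", 80, 80)` yields text that can be pasted into the `content:` field of a unit entry.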


Section 2: Service Discovery and Distributed Configuration

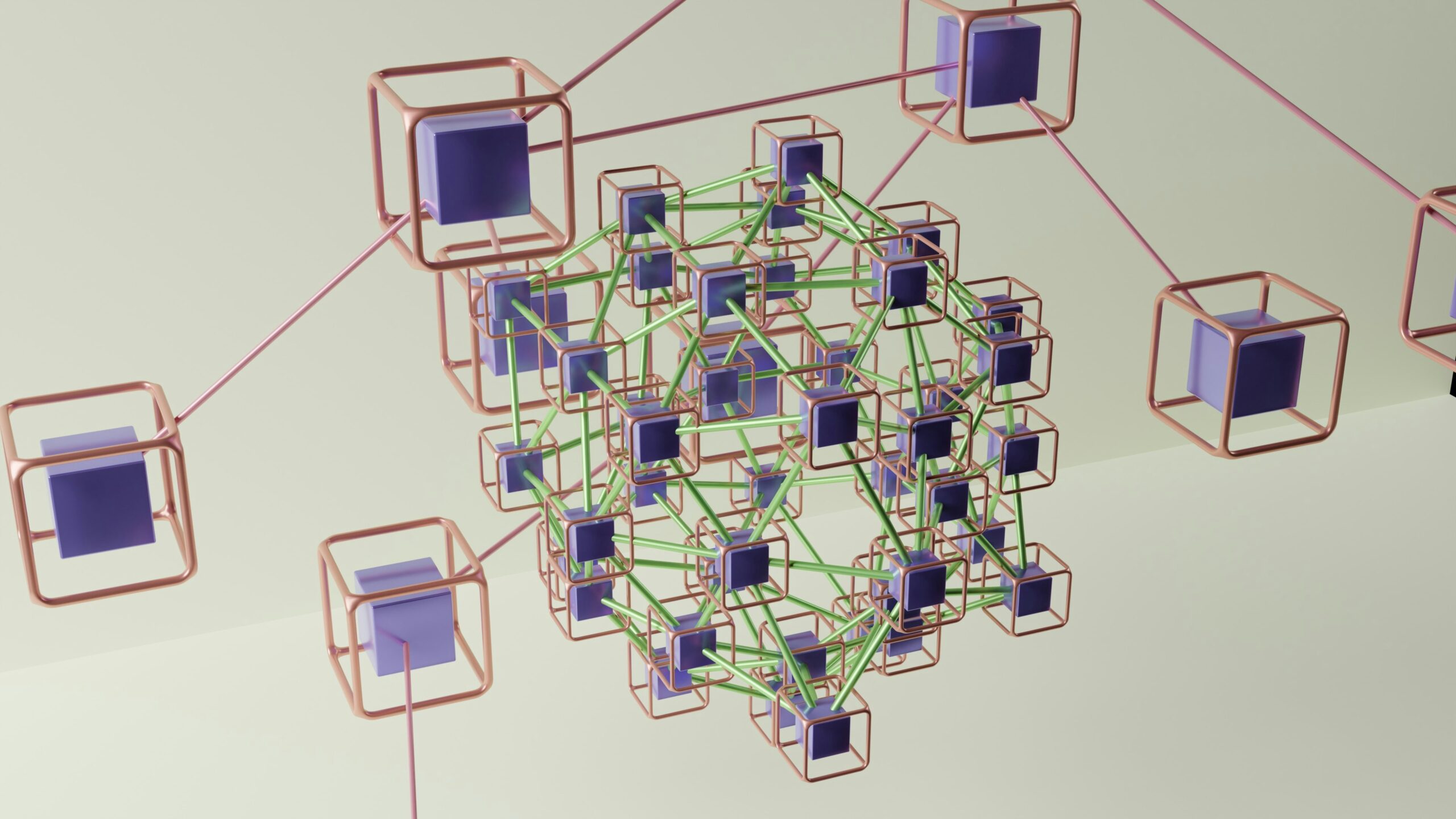

In a cluster of DigitalOcean Droplets, static IP addressing becomes a bottleneck. Dynamic environments, Kubernetes clusters being the best-known example, require dynamic discovery. This is where etcd comes into play. etcd is a distributed key-value store that provides a reliable way to store data across a cluster of machines.

While Redis and PostgreSQL store application data, etcd focuses on cluster coordination. It lets instances announce their presence and configuration to the group, which is fundamental to clustering.

Interacting with etcd Programmatically

To take advantage of a distributed cluster on DigitalOcean, developers often write custom tooling that interfaces with the cluster state. Below is a Python script using the python-etcd3 library. It could run on a worker node to register its availability or to watch for configuration changes.

```python
import json
import socket
import time

import etcd3

# Connect to the local etcd instance (or a remote cluster endpoint)
client = etcd3.client(host='127.0.0.1', port=2379)


def register_service(service_name, port):
    """
    Registers the current node's IP and port to etcd
    with a Time-To-Live (TTL) lease.
    """
    hostname = socket.gethostname()
    # Depending on /etc/hosts, this lookup may return a loopback address
    local_ip = socket.gethostbyname(hostname)
    key = f"/services/{service_name}/{hostname}"
    value = json.dumps({
        "ip": local_ip,
        "port": port,
        "status": "active",
        "timestamp": time.time()
    })

    # Create a lease of 60 seconds
    lease = client.lease(60)
    print(f"Registering {key} -> {value}")
    client.put(key, value, lease=lease)
    return lease


def watch_config_changes():
    """
    Watch for changes in global configuration.
    This call blocks, so run it in its own thread or process.
    """
    events_iterator, cancel = client.watch_prefix('/config/global/')
    print("Watching for config changes...")
    for event in events_iterator:
        print(f"Configuration update detected: {event.key.decode('utf-8')} = {event.value.decode('utf-8')}")
        # Logic to reload application configuration would go here


if __name__ == "__main__":
    # Register this node as a web worker
    my_lease = register_service("web-worker", 8080)
    try:
        # Keep the lease alive (heartbeat), well within the 60-second TTL
        while True:
            my_lease.refresh()
            time.sleep(20)
    except KeyboardInterrupt:
        print("Stopping service registration...")
        # Revoke the lease so the key is removed immediately
        my_lease.revoke()
```

This script demonstrates the “heartbeat” pattern common in DevOps tooling. If the node crashes, the heartbeats stop, the lease expires, and etcd removes the key automatically. Anything watching the /services/ prefix, such as a process that regenerates the backend list for HAProxy or Nginx, sees the deletion and can stop sending traffic to that node.
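The lease mechanics are easier to reason about in isolation. The sketch below is an in-memory stand-in written for this article, not the etcd API: the `LeaseRegistry` class and its methods are hypothetical, and time is passed in explicitly so the behavior is deterministic. Real etcd performs the expiry on the server side.

```python
from typing import Dict, List, Tuple


class LeaseRegistry:
    """Teaching model of keys bound to TTL leases (not a real etcd client)."""

    def __init__(self) -> None:
        # key -> (value, absolute expiry time in seconds)
        self._entries: Dict[str, Tuple[str, float]] = {}

    def _expire(self, now: float) -> None:
        # Drop every key whose lease has run out.
        self._entries = {
            key: entry for key, entry in self._entries.items()
            if entry[1] > now
        }

    def put(self, key: str, value: str, ttl: float, now: float) -> None:
        self._entries[key] = (value, now + ttl)

    def refresh(self, key: str, ttl: float, now: float) -> bool:
        """Heartbeat: extend the expiry if the key is still alive."""
        self._expire(now)
        if key not in self._entries:
            return False
        value, _ = self._entries[key]
        self._entries[key] = (value, now + ttl)
        return True

    def backends(self, prefix: str, now: float) -> List[str]:
        """Return the live keys under a prefix, as a load balancer would."""
        self._expire(now)
        return sorted(k for k in self._entries if k.startswith(prefix))
```

With two workers registered at time 0 on a 60-second TTL, a worker that heartbeats at time 40 is still listed at time 70, while one that stayed silent has disappeared. That is the same outcome the registration script relies on.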

Section 3: Advanced Orchestration and Infrastructure as Code

While manual configuration via the DigitalOcean dashboard is possible, scaling beyond a handful of Droplets calls for a tool such as Terraform. Infrastructure as Code (IaC) lets you define the state of your infrastructure in configuration files. This is particularly relevant for container-optimized operating systems because the OS itself is immutable; all changes must be injected at provisioning time.

Whether you are managing Rocky Linux, AlmaLinux, or Oracle Linux, Terraform provides a unified workflow. For container-optimized images, however, the user_data attribute is critical.

Automating Droplet Deployment with Terraform

The following Terraform HCL (HashiCorp Configuration Language) snippet demonstrates how to deploy a cluster of nodes on DigitalOcean, injecting the cloud-config we defined earlier.


```hcl
terraform {
  required_providers {
    digitalocean = {
      source  = "digitalocean/digitalocean"
      version = "~> 2.0"
    }
  }
}

# var.do_token, var.ssh_fingerprint, var.vpc_id and var.pvt_key must be
# declared as input variables elsewhere in the module.
provider "digitalocean" {
  token = var.do_token
}

resource "digitalocean_tag" "cluster_tag" {
  name = "k8s-worker"
}

resource "digitalocean_droplet" "worker_node" {
  count = 3
  # Legacy CoreOS slug (CoreOS Container Linux is end-of-life);
  # substitute the slug or ID of the image you actually use.
  image    = "coreos-stable"
  name     = "worker-${count.index + 1}"
  region   = "nyc3"
  size     = "s-2vcpu-4gb"
  tags     = [digitalocean_tag.cluster_tag.id]
  ssh_keys = [var.ssh_fingerprint]

  # Enable private networking for internal etcd communication
  vpc_uuid = var.vpc_id

  # Pass the cloud-config file
  user_data = file("${path.module}/cloud-config.yaml")

  connection {
    type        = "ssh"
    user        = "core"
    private_key = file(var.pvt_key)
    host        = self.ipv4_address
  }

  provisioner "remote-exec" {
    inline = [
      # Bounded wait: the cloud-init marker file may not exist on
      # container-optimized images, so poll Docker itself instead.
      "echo 'Waiting for Docker to become available...'",
      "timeout 120 sh -c 'until docker info >/dev/null 2>&1; do sleep 2; done'",
      "docker info"
    ]
  }
}

output "droplet_ips" {
  value = digitalocean_droplet.worker_node[*].ipv4_address
}
```

This code highlights the importance of networking (VPC configuration) and SSH key management. By using remote-exec, we can verify that the Docker daemon is active immediately after provisioning, ensuring the node is ready to accept workloads from an orchestrator such as Kubernetes or Docker Swarm.
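Downstream tooling usually needs the addresses from the droplet_ips output. The Python sketch below parses the JSON document produced by `terraform output -json` and maps each address to the `worker-N` naming scheme used in the Terraform snippet. The function name `droplet_inventory` is a hypothetical helper, and the example assumes the output is a flat list of addresses in creation order.

```python
import ipaddress
import json
from typing import Dict


def droplet_inventory(raw_json: str,
                      output_name: str = "droplet_ips") -> Dict[str, str]:
    """Map worker names to IPs from `terraform output -json` text.

    Hypothetical helper: it assumes the named output holds a list of
    IP address strings, ordered like the Droplets' count index.
    """
    outputs = json.loads(raw_json)
    # Each output is an object whose "value" field carries the data.
    addresses = outputs[output_name]["value"]

    inventory: Dict[str, str] = {}
    for index, address in enumerate(addresses, start=1):
        # Raises ValueError if the string is not a valid IP address.
        ipaddress.ip_address(address)
        inventory[f"worker-{index}"] = address
    return inventory
```

Capturing the command's output in a string and passing it to `droplet_inventory` gives a dictionary that can feed an inventory file, a smoke test, or a DNS update.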

Section 4: Best Practices, Security, and Monitoring

Running a container-optimized OS on DigitalOcean requires a shift in maintenance strategies. Traditional backup tools like Timeshift or rsync are less relevant for the OS itself, as the OS is ephemeral. Instead, the focus shifts to persistent storage (such as LVM volumes or DigitalOcean Volumes) and to backing up the data stored there.

Security Hardening and Firewalls

Even on a minimal OS, security is paramount. The Linux firewall ecosystem is moving toward nftables, but many container systems still rely on iptables because of Docker’s networking stack. It is crucial to configure firewalls to allow traffic only on necessary ports (e.g., 22 for SSH, 2379/2380 for etcd, 80/443 for web traffic), and to keep the etcd ports reachable only by cluster members rather than the public internet.

Furthermore, SELinux plays a major role here. Distributions like Fedora CoreOS enable SELinux by default to isolate containers. Disabling it is a common pitfall that exposes the host to container breakout attacks.
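A port allow-list is easy to express and test as data before it is translated into iptables, nftables, or cloud firewall rules. The following Python sketch uses only the standard library. The `Rule` type, the `is_allowed` function, and every CIDR range shown are assumptions made for illustration; in particular, limiting SSH to an admin range and etcd to a private 10.0.0.0/16 network is a policy chosen for this example.

```python
import ipaddress
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Rule:
    """Allow inbound TCP traffic to `port` from the `source` CIDR."""
    port: int
    source: str


# Example policy; all address ranges are placeholders.
RULES = (
    Rule(22, "203.0.113.0/24"),   # SSH from an admin network
    Rule(80, "0.0.0.0/0"),        # HTTP from anywhere
    Rule(443, "0.0.0.0/0"),       # HTTPS from anywhere
    Rule(2379, "10.0.0.0/16"),    # etcd client traffic, private network only
    Rule(2380, "10.0.0.0/16"),    # etcd peer traffic, private network only
)


def is_allowed(port: int, source_ip: str,
               rules: Iterable[Rule] = RULES) -> bool:
    """Default-deny check: True only if some rule matches port and source."""
    address = ipaddress.ip_address(source_ip)
    return any(
        rule.port == port and address in ipaddress.ip_network(rule.source)
        for rule in rules
    )
```

Keeping the policy in one table makes it straightforward to assert that a public address cannot reach port 2379 before any rule is applied to a real host.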


Monitoring with Systemd Timers

Instead of cron, modern Linux systems often use systemd timers for scheduled tasks. This provides better logging via journalctl and tighter integration with the init system. Below is a bash script designed to be run by a systemd timer to perform a health check and clean up unused Docker resources (pruning), a vital task for keeping disk usage under control.

```bash
#!/bin/bash
# maintenance-check.sh
# A script to monitor disk usage and prune docker resources

THRESHOLD=85
PARTITION="/var/lib/docker"

# -P keeps each filesystem on a single line so the awk field position is stable
current_usage=$(df -P "$PARTITION" | awk 'NR==2 {print $5}' | sed 's/%//')

echo "[$(date)] Checking disk usage for $PARTITION. Current: ${current_usage}%"

if [ "$current_usage" -gt "$THRESHOLD" ]; then
    echo "WARNING: Disk usage above threshold. Initiating Docker prune."

    # Remove stopped containers
    docker container prune -f

    # Remove unused images
    docker image prune -a -f

    # Remove unused volumes (Be careful with this in production!)
    # docker volume prune -f

    new_usage=$(df -P "$PARTITION" | awk 'NR==2 {print $5}' | sed 's/%//')
    echo "Cleanup complete. New usage: ${new_usage}%"

    # Log to system journal with high priority
    logger -p user.crit "Docker cleanup triggered. Usage dropped from ${current_usage}% to ${new_usage}%"
else
    echo "Disk usage is within normal limits."
fi

# Check for zombie processes (process state begins with Z)
zombies=$(ps -eo stat= | grep -c '^Z')

if [ "$zombies" -gt 0 ]; then
    echo "ALERT: Detected $zombies zombie processes."
    logger -p user.warn "Zombie processes detected on node."
fi
```

This script combines standard shell tools (awk, sed, grep) with process inspection (ps). Because it logs to the system journal, its events can be collected by external tooling such as the ELK Stack (Elasticsearch, Logstash, Kibana), or turned into metrics for Prometheus by an exporter.
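The script still needs a timer to trigger it. As a rough sketch, the Python helper below renders a matching pair of systemd units: a oneshot service that runs the script and a timer that schedules it. The function name `render_timer_units`, the unit name `maintenance-check`, the hourly schedule, and the script path used in the usage note are all assumptions of this example.

```python
from typing import Dict


def render_timer_units(script_path: str, schedule: str = "hourly",
                       name: str = "maintenance-check") -> Dict[str, str]:
    """Return {filename: unit text} for a service and its timer.

    Hypothetical helper; a timer activates the service that shares its
    name unless told otherwise, so both units use the same base name.
    """
    service = "\n".join([
        "[Unit]",
        "Description=Disk usage check and Docker prune",
        "",
        "[Service]",
        # oneshot: the unit is considered finished when the script exits.
        "Type=oneshot",
        f"ExecStart={script_path}",
    ]) + "\n"

    timer = "\n".join([
        "[Unit]",
        f"Description=Run {name}.service on a schedule",
        "",
        "[Timer]",
        f"OnCalendar={schedule}",
        # Persistent: catch up on a run missed while the host was down.
        "Persistent=true",
        "",
        "[Install]",
        "WantedBy=timers.target",
    ]) + "\n"

    return {f"{name}.service": service, f"{name}.timer": timer}
```

For instance, `render_timer_units("/opt/bin/maintenance-check.sh")` returns two unit texts that can be written under /etc/systemd/system or embedded in user data; enabling the timer, not the service, is what puts the schedule into effect.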

Conclusion

The availability of container-optimized Linux distributions on DigitalOcean marks a significant step forward for developers and system administrators. It bridges the gap between simple VPS hosting and enterprise-grade orchestration. By embracing the principles of immutable infrastructure, declarative configuration via cloud-init, and robust service discovery with etcd, users can build systems that are self-healing and easily scalable.

Whether you are experimenting with IoT on the edge or building large clusters of web servers, the combination of DigitalOcean’s infrastructure and modern Linux distributions provides a powerful foundation. As the Linux kernel continues to deliver performance improvements such as BPF and io_uring, and as open source projects drive innovation in container runtimes, the ecosystem will only become more efficient. The key takeaway is to stop treating servers as unique entities and start treating them as interchangeable resources defined strictly by code.