The Evolution of Linux Logging: From Syslog to Structured Observability

In the rapidly evolving landscape of open source operating systems, keeping up with Linux logging tools and methodologies is crucial for system administrators, DevOps engineers, and security analysts. As distributions such as Debian, Red Hat Enterprise Linux, and Ubuntu continue to modernize their underpinnings, the way systems record data has shifted from simple text files to structured, indexed binary formats. Ongoing community discussion about data ownership and platform independence parallels the technical shift towards self-hosted, centralized logging solutions that ensure data sovereignty and granular observability.

Logging is the heartbeat of any Linux infrastructure. Whether you are managing a single laptop running Arch Linux or orchestrating a large Kubernetes cluster, your ability to interpret logs determines how well you can troubleshoot failures and secure your environment. This article examines the current state of Linux logging, covering the transition from traditional syslog daemons to the systemd journal, and how to implement robust logging strategies in an era dominated by cloud platforms and containers.

The Modern Standard: Systemd Journal and Binary Logs

For decades, Linux administration centered on text-based log files located in /var/log. However, with the widespread adoption of systemd across major distributions, including CentOS, Fedora, and openSUSE, the default has shifted to systemd-journald. Unlike traditional syslog daemons, the journal stores data in a binary format. This allows for structured metadata, indexing, and faster querying, which matters when dealing with high-volume log streams.

The primary tool for interacting with this data is journalctl. Mastering it can significantly reduce incident response times. Instead of using grep or awk to parse unstructured text, administrators can filter by specific fields such as systemd unit, time range, or priority level. This is particularly useful for DevOps engineers who need to correlate events across different sources, such as Docker containers and the host kernel.

Below is a practical example of how to use journalctl for advanced filtering. This approach is more efficient than legacy text parsing and is covered in the RHCSA and LFCS certification curricula.

# 1. View logs for a specific service (e.g., Nginx) since the last boot
# This is crucial for checking web server status after a restart.
journalctl -u nginx.service -b

# 2. View logs at a specific priority level and above (e.g., err and more severe)
# Useful for filtering out noise and focusing on critical failures.
journalctl -p err -b

# 3. View kernel messages only (the journal's counterpart to dmesg)
# Essential for diagnosing hardware or driver issues.
journalctl -k

# 4. Follow logs in real time (similar to tail -f) with verbose output
# This shows all metadata fields, useful for debugging SELinux or AppArmor contexts.
journalctl -f -o verbose

# 5. Vacuum archived journal files to free up disk space (retain only the last 2 days)
# A vital maintenance task for disk management.
journalctl --vacuum-time=2d

Understanding these commands is vital. For instance, when troubleshooting networking issues involving NetworkManager or WireGuard, the verbose output can reveal details, such as the originating unit and process, that a standard text log might obscure.
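Because the journal is structured, its entries can also be consumed programmatically. The following is a minimal sketch, assuming the input comes from `journalctl -o json`, which prints one JSON object per line. The function names and the sample entries are hypothetical and exist only for illustration; the field names (`MESSAGE`, `PRIORITY`, `_SYSTEMD_UNIT`, `__REALTIME_TIMESTAMP`) are standard journal fields.

```python
import json
from datetime import datetime, timezone


def parse_journal_json(lines):
    """Turn lines of `journalctl -o json` output into small dictionaries."""
    entries = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        raw = json.loads(line)
        # __REALTIME_TIMESTAMP is microseconds since the Unix epoch, as a string
        micros = int(raw["__REALTIME_TIMESTAMP"])
        entries.append({
            "time": datetime.fromtimestamp(micros / 1_000_000, tz=timezone.utc),
            "unit": raw.get("_SYSTEMD_UNIT", "unknown"),
            "priority": int(raw.get("PRIORITY", 6)),
            "message": raw.get("MESSAGE", ""),
        })
    return entries


def errors_only(entries):
    """Keep entries at priority err (3) or more severe (lower numbers)."""
    return [e for e in entries if e["priority"] <= 3]


# Hypothetical sample in the shape journalctl emits; real output has more fields.
SAMPLE = [
    '{"__REALTIME_TIMESTAMP": "1700000000000000", "_SYSTEMD_UNIT": "nginx.service", '
    '"PRIORITY": "3", "MESSAGE": "bind() failed"}',
    '{"__REALTIME_TIMESTAMP": "1700000001000000", "_SYSTEMD_UNIT": "nginx.service", '
    '"PRIORITY": "6", "MESSAGE": "started"}',
]

for entry in errors_only(parse_journal_json(SAMPLE)):
    print(entry["time"].isoformat(), entry["unit"], entry["message"])
```

On a real host, the sample list could be replaced by the lines of `journalctl -u nginx.service -b -o json` captured through `subprocess.run`. Note that journalctl may encode a non-UTF-8 `MESSAGE` as an array of byte values, which this sketch does not handle.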

Implementation: Hybrid Logging with Rsyslog and Text Files

Despite the dominance of systemd, traditional text logs remain relevant. Many compliance standards and legacy analysis tools require plain text formats. Consequently, many Debian and Linux Mint systems employ a hybrid approach: they run systemd-journald to capture data and rsyslog to write that data to standard files like /var/log/syslog or /var/log/auth.log.

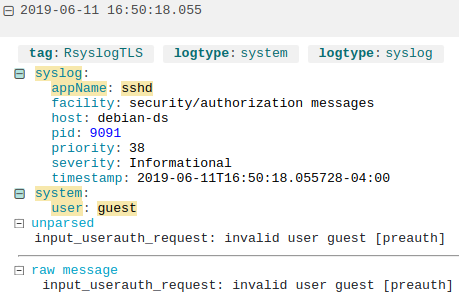

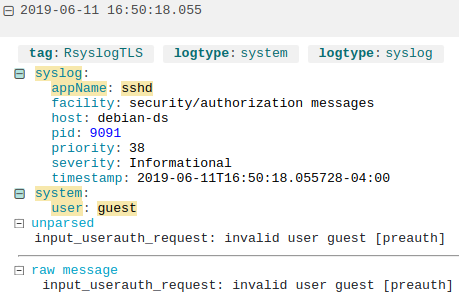

Rsyslog remains actively developed, with ongoing work on performance and TLS encryption, making it a robust choice for log forwarding. In an enterprise environment, you rarely want logs to exist only on the local machine. If a server is compromised or suffers a catastrophic filesystem failure, local logs may be lost or tampered with. Therefore, configuring rsyslog to forward logs to a central server is a best practice.

The following configuration snippet demonstrates how to set up a custom log filter in rsyslog. This is useful for separating specific application logs, such as those from PostgreSQL or Apache, into their own files for easier auditing.

# /etc/rsyslog.d/99-custom-app.conf

# Define a template for the log format.
# RFC 3339 timestamps are high-precision, which is crucial for forensics.
$template CustomFormat,"%timestamp:::date-rfc3339% %hostname% %syslogtag% %msg%\n"

# Filter: direct logs from the program named 'custom_app' to a specific file
# and stop processing them further (to avoid duplication in syslog).
if $programname == 'custom_app' then {
    action(type="omfile" file="/var/log/custom_app.log" template="CustomFormat")
    stop
}

# Forward authentication logs to a remote log server over TLS.
# Note: the gtls driver also needs a CA certificate configured globally, and
# x509/name authentication needs StreamDriverPermittedPeers set to the name in
# the server's certificate. Port 6514 is the registered port for syslog over
# TLS; 514 works only if the receiver listens for TLS there.
authpriv.* action(type="omfwd"
    target="192.168.1.50"
    port="514"
    protocol="tcp"
    StreamDriver="gtls"
    StreamDriverMode="1"
    StreamDriverAuthMode="x509/name"
)

This configuration highlights the flexibility of Linux logging. By using TLS, administrators can ensure that sensitive data about OpenSSH logins or sudo usage is transmitted securely, reducing the risk of interception or tampering in transit.
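To see how an application might feed a program-name filter like the one above, here is a minimal sketch using Python's standard `logging.handlers.SysLogHandler`. The function name `make_syslog_logger` is hypothetical, and the sketch assumes the local syslog socket is at /dev/log and that rsyslog derives the program name from the tag text before the colon, which is its usual behavior.

```python
import logging
import logging.handlers


def make_syslog_logger(address="/dev/log", program="custom_app"):
    """Return a logger whose messages are tagged with `program` for syslog.

    `address` is either the path of the local syslog socket or a
    (host, port) tuple for UDP delivery.
    """
    handler = logging.handlers.SysLogHandler(address=address)
    # The ident string is prepended to every message, forming the syslog tag.
    handler.ident = f"{program}: "
    logger = logging.getLogger(program)
    logger.setLevel(logging.INFO)
    logger.addHandler(handler)
    return logger


# Typical use on a host running rsyslog (not executed here):
#   log = make_syslog_logger()
#   log.info("service started")
```

With the default facility (user) and the INFO level, the handler emits a message of the form `<14>custom_app: service started`, where 14 is the encoded syslog priority.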

Advanced Techniques: Centralized Observability and Automation

As infrastructure moves to cloud platforms and microservices, viewing logs on a single server is insufficient. The trend in DevOps is towards observability stacks. Tools like the ELK Stack (Elasticsearch, Logstash, Kibana) or the PLG Stack (Promtail, Loki, Grafana) have become common choices. They pair naturally with Prometheus and Grafana, where metrics and logs are correlated to provide a holistic view of system health.

For developers working in Python or Go, emitting structured JSON directly from the application is preferred over unstructured text. JSON logs can be ingested by log aggregators without complex parsing rules. This practice is increasingly treated as a prerequisite for cloud-native applications running on Alpine Linux or CoreOS.

Here is a Python example demonstrating how to structure logs for modern observability pipelines. This script uses the standard logging library but formats the output as JSON, making it ready for ingestion by systems like Datadog, Splunk, or Loki.

import json
import logging
import sys
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Custom formatter that outputs each log record as one JSON object."""

    def format(self, record):
        log_record = {
            # Use the record's own creation time as a timezone-aware UTC timestamp
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "message": record.getMessage(),
            "module": record.module,
            "process_id": record.process,
            # Static context for containers (Docker/K8s); hardcoded here for illustration
            "environment": "production",
            "app_version": "1.2.0",
        }
        # Fields passed via extra={...} become attributes on the record
        if hasattr(record, "memory_usage"):
            log_record["memory_usage"] = record.memory_usage
        if record.exc_info:
            log_record["exception"] = self.formatException(record.exc_info)
        return json.dumps(log_record)


# Configure the logger
logger = logging.getLogger("DevOpsLogger")
logger.setLevel(logging.INFO)

# Handler to write to stdout (best practice for Docker containers)
handler = logging.StreamHandler(sys.stdout)
handler.setFormatter(JsonFormatter())
logger.addHandler(handler)

# Generate log events
logger.info("Application started successfully on Linux kernel 6.5")
logger.warning("High memory usage detected", extra={"memory_usage": "85%"})

try:
    x = 1 / 0
except ZeroDivisionError:
    logger.error("Calculation failed", exc_info=True)

By outputting JSON to standard out, this application adheres to the “Twelve-Factor App” methodology. In a Kubernetes environment, an agent like Fluentd or Promtail would pick up this stream, parse the JSON, and ship it to a backend such as Elasticsearch or Loki, where it becomes searchable through Kibana or Grafana. This integration is what makes automated, cluster-wide log analysis practical.
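The consuming side of such a pipeline can be illustrated with a small sketch. It is not how Fluentd or Promtail are implemented; it only shows, under the assumption of one JSON object per line with a `level` key, why structured output needs no custom parsing rules. The function name and sample lines are hypothetical.

```python
import json
from collections import Counter


def summarize_json_logs(lines):
    """Count log levels in a stream of JSON lines, skipping malformed ones."""
    levels = Counter()
    skipped = 0
    for line in lines:
        try:
            record = json.loads(line)
            levels[record["level"]] += 1
        except (json.JSONDecodeError, KeyError, TypeError):
            # Not JSON, not an object, or missing the "level" key
            skipped += 1
    return levels, skipped


# Hypothetical sample stream, including one line that is not JSON
SAMPLE_STREAM = [
    '{"level": "INFO", "message": "Application started"}',
    '{"level": "WARNING", "message": "High memory usage detected"}',
    "plain text that a legacy component wrote",
    '{"level": "ERROR", "message": "Calculation failed"}',
    '{"level": "INFO", "message": "Request served"}',
]

levels, skipped = summarize_json_logs(SAMPLE_STREAM)
print(dict(levels), "skipped:", skipped)
```

Counting malformed lines instead of raising keeps the collector running when a component writes plain text into the same stream.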

Best Practices: Rotation, Retention, and Security

Effective log management is not just about collection; it is also about lifecycle management. Unmanaged logs can fill up storage, causing services such as Nginx or Apache to fail when they can no longer write to disk. The standard tool for this is logrotate, which handles the automatic rotation, compression, and removal of log files.

Furthermore, security is paramount. Logs often contain sensitive information. Mandatory access control systems such as SELinux and AppArmor can restrict access to log files to prevent unauthorized modification, a technique attackers use to cover their tracks. Ensuring that log files have strict permissions and cannot be modified by non-root users is a cornerstone of log security.
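A quick way to check the permission side of this is to audit file modes directly. The sketch below is one possible approach, assuming that any log file readable or writable by "other" users counts as a finding; the function name and the policy are illustrative rather than a standard.

```python
import os
import stat


def audit_log_permissions(paths):
    """Return (path, problem) pairs for files that other users can read or write."""
    findings = []
    for path in paths:
        mode = os.stat(path).st_mode
        if mode & stat.S_IWOTH:
            findings.append((path, "world-writable"))
        elif mode & stat.S_IROTH:
            findings.append((path, "world-readable"))
    return findings


# Typical use (paths are examples; adjust to the files you care about):
#   for path, problem in audit_log_permissions(["/var/log/auth.log"]):
#       print(f"{path}: {problem}")
```

A file with mode 0640 produces no finding, while 0644 is reported as world-readable. The check covers classic permission bits only and does not inspect ACLs or SELinux and AppArmor policy.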

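Below is a logrotate configuration that emphasizes compression and secure permissions. The same directives work on Rocky Linux, AlmaLinux, and Ubuntu, although the rsyslog-rotate helper called in the postrotate script is shipped by the Debian and Ubuntu rsyslog packages, so other distributions may need a different command there.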

# /etc/logrotate.d/custom-security-audit

/var/log/security-audit/*.log {
    # Rotate logs daily
    daily

    # Keep 30 rotated logs (30 days at daily rotation)
    rotate 30

    # Compress old logs to save space (gzip)
    compress

    # Delay compression to the next rotation cycle
    # This prevents issues if the program is still writing to the open file handle.
    delaycompress

    # If the log file is missing, do not generate an error
    missingok

    # Do not rotate if the file is empty
    notifempty

    # Set strict file permissions (mode 0640, owner root, group adm)
    # Important for compliance and incident response.
    create 0640 root adm

    # Execute a script after rotation (e.g., signal a service or record the event)
    postrotate
        # Tell rsyslog to reopen its log files
        /usr/lib/rsyslog/rsyslog-rotate
        # Example: record the rotation in a local history file
        echo "Logs rotated at $(date)" >> /var/log/rotation-history.log
    endscript
}

Monitoring and Alerting

Finally, logs are useless if no one looks at them. Integrating log analysis with alerting tools is the final step in the pipeline. Using a configuration management tool such as Ansible, you can deploy agents that watch for specific patterns, such as repeated SSH failures (indicating a brute force attack) or database connection errors in MySQL logs. This proactive approach transforms logs from a forensic tool into a real-time monitoring asset.
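As an illustration of such pattern watching, the sketch below counts failed SSH password attempts per source address. It assumes the common OpenSSH message wording ("Failed password for ... from ADDRESS port N"); the function name, the threshold of five, and the sample lines are hypothetical choices for this example.

```python
import re
from collections import Counter

# Matches the usual sshd message for a rejected password, with or without
# the "invalid user" prefix, and captures the source address.
FAILED_LOGIN = re.compile(
    r"Failed password for (?:invalid user )?\S+ from (\S+) port \d+"
)


def find_brute_force(lines, threshold=5):
    """Return {address: count} for sources with at least `threshold` failures."""
    counts = Counter()
    for line in lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group(1)] += 1
    return {ip: n for ip, n in counts.items() if n >= threshold}


# Hypothetical auth log excerpt using documentation-range IP addresses
SAMPLE_AUTH_LOG = (
    ["Jan 10 12:00:01 host sshd[1234]: Failed password for invalid user admin "
     "from 203.0.113.5 port 52144 ssh2"] * 6
    + ["Jan 10 12:00:09 host sshd[1240]: Failed password for root "
       "from 198.51.100.7 port 40022 ssh2"] * 2
    + ["Jan 10 12:01:00 host sshd[1250]: Accepted publickey for deploy "
       "from 192.0.2.10 port 50000 ssh2"]
)

print(find_brute_force(SAMPLE_AUTH_LOG))
```

A production setup would normally apply a time window and hand the result to an alerting system rather than printing it.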

Conclusion

The domain of Linux logging is vast, encompassing everything from low-level kernel messages to high-level application traces. As the ecosystem diversifies with distributions like EndeavourOS and NixOS, and as architectures extend to edge computing and IoT, the fundamentals of logging remain constant: capture, structure, analyze, and secure.

By mastering journalctl, understanding the legacy power of rsyslog, and adopting modern JSON-based structured logging for cloud-native applications, administrators can ensure they maintain full visibility into their systems. Whether you are gaming on a Steam Deck or managing a Google Cloud instance, the logs tell the story of your system. Listening to that story is the key to stability and security in the open-source world.